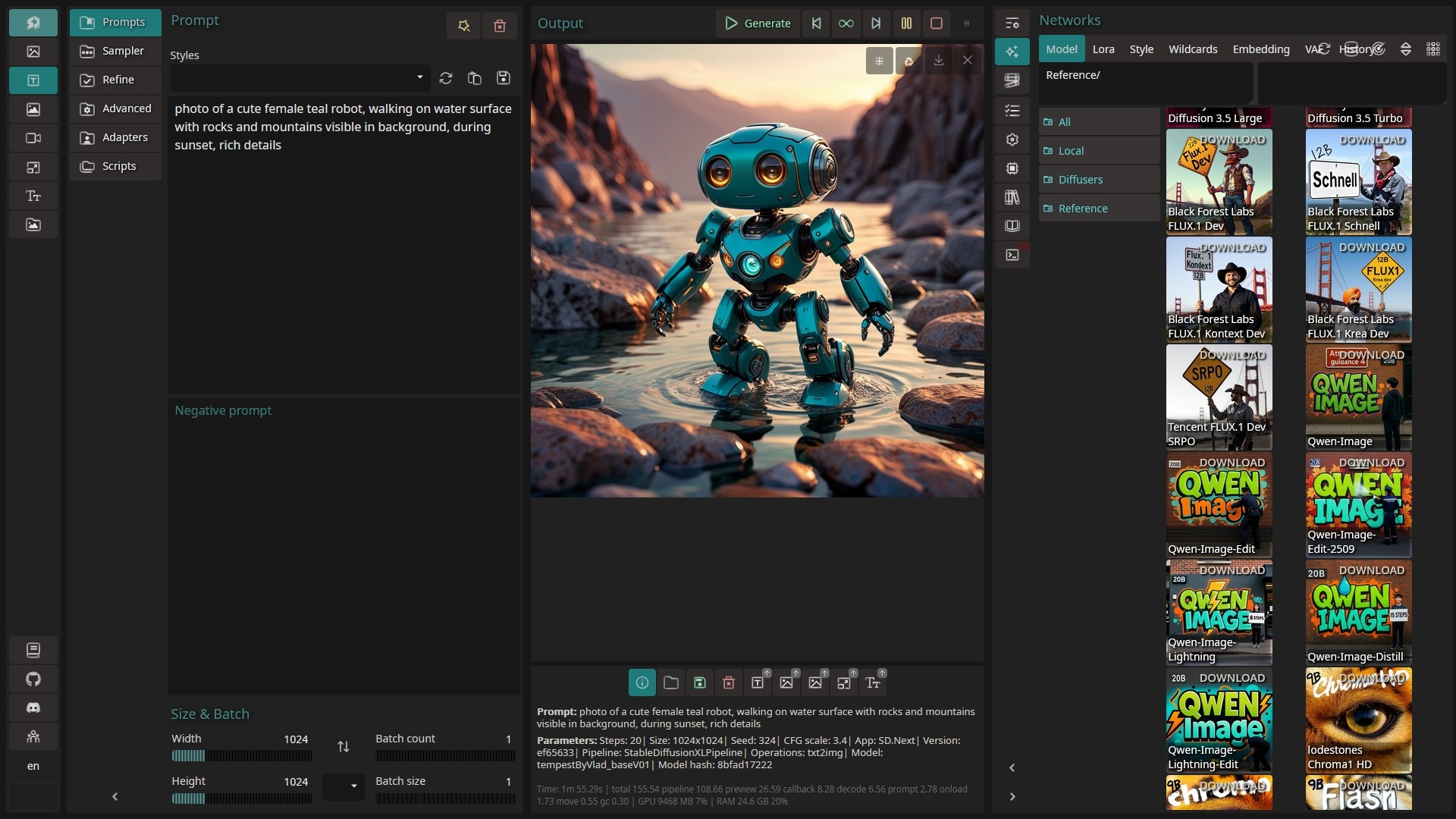

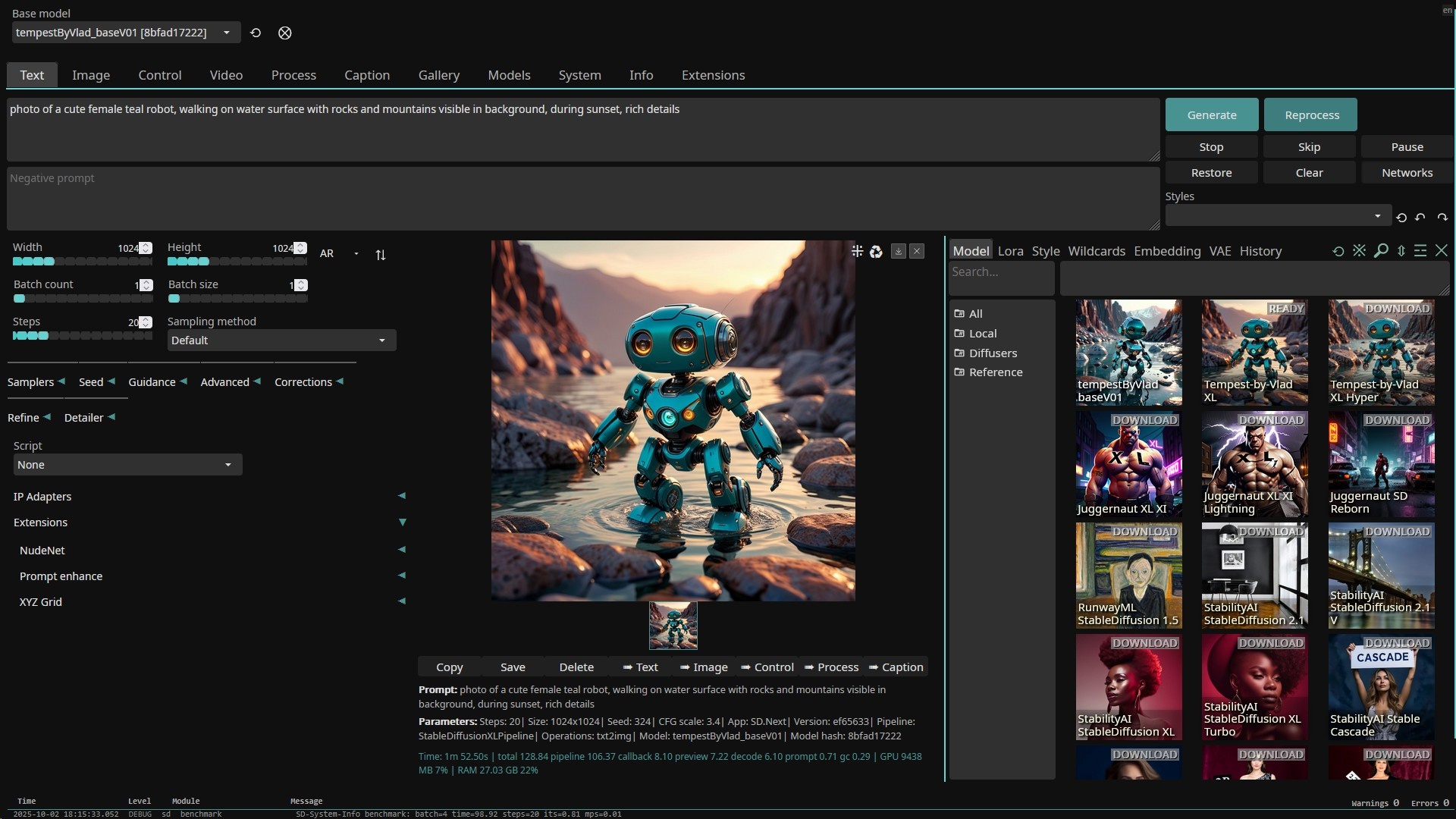

Modern UI

Classic UI

IPEX (Intel Extension for PyTorch) version of SD.Next

Some prebuilt Docker images: https://hub.docker.com/r/liutyi/sdnext-ipex/tags

See generation examples for all models: https://vladmandic.github.io/sd-samples/compare.html


SD.Next openvino setup for Intel Core i7 1355U with Intel Xe iGPU

...

| Code Block |

|---|

services:
  sdnext-ipex:
    build:
      dockerfile: Dockerfile
    image: liutyi/sdnext-ipex:25.9.1-igpu
    container_name: sdnext-ipex
    restart: unless-stopped
    devices:
      - /dev/dri:/dev/dri
      # - /dev/accel:/dev/accel
    environment:
      - HF_DEBUG=True
    ports:
      - 7860:7860
    volumes:
      - /docker/sdnext/app-volume:/app
      - /docker/sdnext/mnt-volume:/mnt
      - /docker/sdnext/hf-volume:/root/.cache/huggingface
      - /docker/sdnext/python-volume:/usr/local/lib/python3.10
      # - /dev/shm:/dev/shm |
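Before starting the container it can help to confirm that the host actually exposes the device nodes that the compose file maps through `/dev/dri`. The following is a minimal sketch, not part of SD.Next or Docker: the helper name `find_render_nodes` is hypothetical, and it assumes the usual Linux naming where GPU render nodes appear as `renderD*` entries under `/dev/dri`.

```python
from pathlib import Path


def find_render_nodes(dri_dir="/dev/dri"):
    """Return the sorted names of renderD* entries under dri_dir.

    An empty list means the directory is missing or holds no render nodes,
    in which case mapping /dev/dri into the container will not expose a GPU.
    """
    root = Path(dri_dir)
    if not root.is_dir():
        return []
    return sorted(p.name for p in root.glob("renderD*"))
```

Calling `find_render_nodes()` on the Docker host and getting an empty list would suggest checking the GPU driver on the host before debugging the container.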

Dockerfile

...

| Code Block |

|---|

# SD.Next IPEX Dockerfile
# docs: <https://github.com/vladmandic/sdnext/wiki/Docker>

# base image
FROM ubuntu:noble

# metadata
LABEL org.opencontainers.image.vendor="SD.Next"
LABEL org.opencontainers.image.authors="liutyi"
LABEL org.opencontainers.image.url="https://github.com/liutyi/sdnext/"
LABEL org.opencontainers.image.documentation="https://github.com/vladmandic/sdnext/wiki/Docker"
LABEL org.opencontainers.image.source="https://github.com/vladmandic/sdnext/"
LABEL org.opencontainers.image.licenses="AGPL-3.0"
LABEL org.opencontainers.image.title="SD.Next IPEX"
LABEL org.opencontainers.image.description="SD.Next: Advanced Implementation of Stable Diffusion and other Diffusion-based generative image models"
LABEL org.opencontainers.image.base.name="https://hub.docker.com/_/ubuntu:noble"
LABEL org.opencontainers.image.version="25.9.0"

# essentials
RUN apt-get update && \
    apt-get install -y --no-install-recommends --fix-missing \
    software-properties-common \
    build-essential \
    ca-certificates \
    wget \
    gpg \
    git

# OpenMP and TBB runtimes (libgomp1 for pytorch, libtbb12 for the NPU driver)
RUN apt-get install -y --no-install-recommends --fix-missing \
    libgomp1 \
    libtbb12

# NPU
RUN wget https://github.com/intel/linux-npu-driver/releases/download/v1.23.0/linux-npu-driver-v1.23.0.20250827-17270089246-ubuntu2404.tar.gz && \
    tar -xf linux-npu-driver-v1.23.0.20250827-17270089246-ubuntu2404.tar.gz && \
    dpkg -i *.deb && \
    rm -f linux-npu-driver-v1.23.0.20250827-17270089246-ubuntu2404.tar.gz intel-*

# required by pytorch / ipex
RUN apt-get install -y --no-install-recommends --fix-missing \
    libgl1 \
    libglib2.0-0

# python3.12
RUN apt-get install -y --no-install-recommends --fix-missing \
    python3 \
    python3-dev \
    python3-venv \
    python3-pip

# intel compute runtime
RUN add-apt-repository ppa:kobuk-team/intel-graphics
RUN apt-get update
RUN apt-get install -y --no-install-recommends --fix-missing \
    intel-opencl-icd \
    libze-intel-gpu1 \
    libze1

# cleanup
RUN /usr/sbin/ldconfig
RUN apt-get clean && rm -rf /var/lib/apt/lists/*

# set paths to use with sdnext
ENV SD_DOCKER=true
ENV SD_VAE_DEBUG=true
ENV SD_DATADIR="/mnt/data"
ENV SD_MODELSDIR="/mnt/models"
ENV venv_dir="/mnt/python/venv"

# intel specific environment variables
ENV IPEX_SDPA_SLICE_TRIGGER_RATE=1
ENV IPEX_ATTENTION_SLICE_RATE=0.5
ENV IPEX_FORCE_ATTENTION_SLICE=1
ENV IPEXRUN=True

# git clone and run sdnext
# RUN echo '#!/bin/bash\ngit status || git clone https://github.com/vladmandic/sdnext.git -b dev .\n/app/webui.sh "$@"' | tee /bin/startup.sh
RUN echo '#!/bin/bash\ngit status || git clone https://github.com/liutyi/sdnext.git -b ipex .\n/app/webui.sh "$@"' | tee /bin/startup.sh
RUN chmod 755 /bin/startup.sh

# actually run sdnext
WORKDIR /app
ENTRYPOINT [ "startup.sh", "-f", "--use-ipex", "--uv", "--listen", "--insecure", "--api-log", "--log", "sdnext.log" ]

# expose port
EXPOSE 7860

# healthcheck function
#HEALTHCHECK --interval=60s --timeout=10s --start-period=60s --retries=3 CMD curl --fail http://localhost:7860/sdapi/v1/status || exit 1

# stop signal
STOPSIGNAL SIGINT |
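The `HEALTHCHECK` line in the Dockerfile is commented out, so Docker will not report whether the server is answering. A rough equivalent can be run from the host. The sketch below polls the same `/sdapi/v1/status` URL that the commented check uses, with defaults that mirror its interval, timeout and retry values. The function names `is_up` and `wait_until_ready` are hypothetical, and the sketch only assumes that a healthy server answers that URL with HTTP 200.

```python
import time
import urllib.error
import urllib.request


def is_up(url, timeout=10):
    """Return True if a GET on url answers with HTTP 200, False on any failure."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Covers connection refused, timeouts and non-2xx HTTP errors.
        return False


def wait_until_ready(url, retries=3, interval=60, probe=is_up, sleep=time.sleep):
    """Probe url up to `retries` times, sleeping `interval` seconds between tries."""
    for attempt in range(retries):
        if probe(url):
            return True
        if attempt < retries - 1:
            sleep(interval)
    return False
```

For example, `wait_until_ready("http://localhost:7860/sdapi/v1/status")` could be called after `docker compose up -d`. The first start installs packages and can take several minutes, so a larger `retries` value may be needed there.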

start/stop commands

| Code Block |

|---|

docker compose up -d

docker compose down --rmi local |

nginx config

/etc/nginx/sites-enabled/sdnext.conf

| Code Block |

|---|

server {
    listen 80;
    server_name sdnext.example.com;
    return 301 https://$server_name$request_uri;
}

server {
    listen 443 ssl;
    server_name sdnext.example.com;

    # SSL Settings
    ssl_certificate /etc/ssl/private/wildcard.pem;
    ssl_certificate_key /etc/ssl/private/wildcard.pem;
    ssl_trusted_certificate "/etc/ssl/certs/wildcard.ca";
    add_header Strict-Transport-Security 'max-age=15552000; includeSubDomains';
    ssl_protocols TLSv1.3 TLSv1.2;
    ssl_ciphers "EECDH+ECDSA+AESGCM EECDH+aRSA+AESGCM EECDH+ECDSA+SHA384 EECDH+ECDSA+SHA256 EECDH+aRSA+SHA384 EECDH+aRSA+SHA256 EECDH+aRSA+RC4 EECDH EDH+aRSA HIGH !RC4 !aNULL !eNULL !LOW !3DES !MD5 !EXP !PSK !SRP !DSS";
    ssl_session_cache shared:SSL:20m;
    ssl_session_timeout 1h;
    ssl_prefer_server_ciphers on;
    ssl_stapling on;
    ssl_stapling_verify on;

    # Timeouts
    client_max_body_size 50G;
    client_body_timeout 600s;
    proxy_read_timeout 600s;
    proxy_send_timeout 600s;
    send_timeout 600s;

    # Set headers
    proxy_set_header Host $http_host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto $scheme;

    # enable websockets
    proxy_http_version 1.1;
    proxy_set_header Upgrade $http_upgrade;
    proxy_set_header Connection "upgrade";
    proxy_redirect off;

    # DNS
    resolver 1.1.1.1 8.8.4.4 valid=300s;
    resolver_timeout 5s;

    location /file=/mnt/data {
        alias /docker/sdnext/mnt-volume/data;
    }

    location / {
        proxy_pass http://127.0.0.1:7860;
    }
} |

config.json

/docker/sdnext/mnt-volume/data/config.json

| Code Block |

|---|

{
  "sd_model_checkpoint": "Diffusers/imnotednamode/Chroma-v36-dc-diffusers [38ce7ce7f3]",
  "outdir_txt2img_samples": "/mnt/data/outputs/text",
  "outdir_img2img_samples": "/mnt/data/outputs/image",
  "outdir_control_samples": "/mnt/data/outputs/control",
  "outdir_extras_samples": "/mnt/data/outputs/extras",
  "outdir_save": "/mnt/data/outputs/save",
  "outdir_video": "/mnt/data/outputs/video",
  "outdir_init_images": "/mnt/data/outputs/init-images",
  "outdir_txt2img_grids": "/mnt/data/outputs/grids",
  "outdir_img2img_grids": "/mnt/data/outputs/grids",
  "outdir_control_grids": "/mnt/data/outputs/grids",
  "diffusers_version": "8adc6003ba4dbf5b61bb4f1ce571e9e55e145a99",
  "sd_checkpoint_hash": null,
  "huggingface_token": "hf_PUT-HERE-YOUR-HuggingFace-Token",
  "samples_filename_pattern": "[seq]-[date]-[model_name]-[width]x[height]-STEP[steps]-CFG[cfg]-Seed[seed]"
} |
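Instead of pasting the Hugging Face token into `config.json` by hand, the `huggingface_token` key can be set by a small script so the token never ends up in notes or shell history. This is only a sketch: the helper name `set_hf_token` is hypothetical, and it assumes the file is plain JSON with the keys shown above.

```python
import json
from pathlib import Path


def set_hf_token(config_path, token):
    """Set huggingface_token in config.json, keeping all other keys unchanged.

    If the file does not exist yet, a new one with only the token is created.
    Returns the resulting configuration as a dict.
    """
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    config["huggingface_token"] = token
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

A possible call is `set_hf_token("/docker/sdnext/mnt-volume/data/config.json", os.environ["HF_TOKEN"])`, where reading the token from an `HF_TOKEN` environment variable is an assumption of this example. SD.Next writes `config.json` itself (see the `Save:` lines in the startup log), so it is probably safer to run this while the container is stopped.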

UI config

/docker/sdnext/mnt-volume/data/ui-config.json

...

https://huggingface.co/settings/tokens

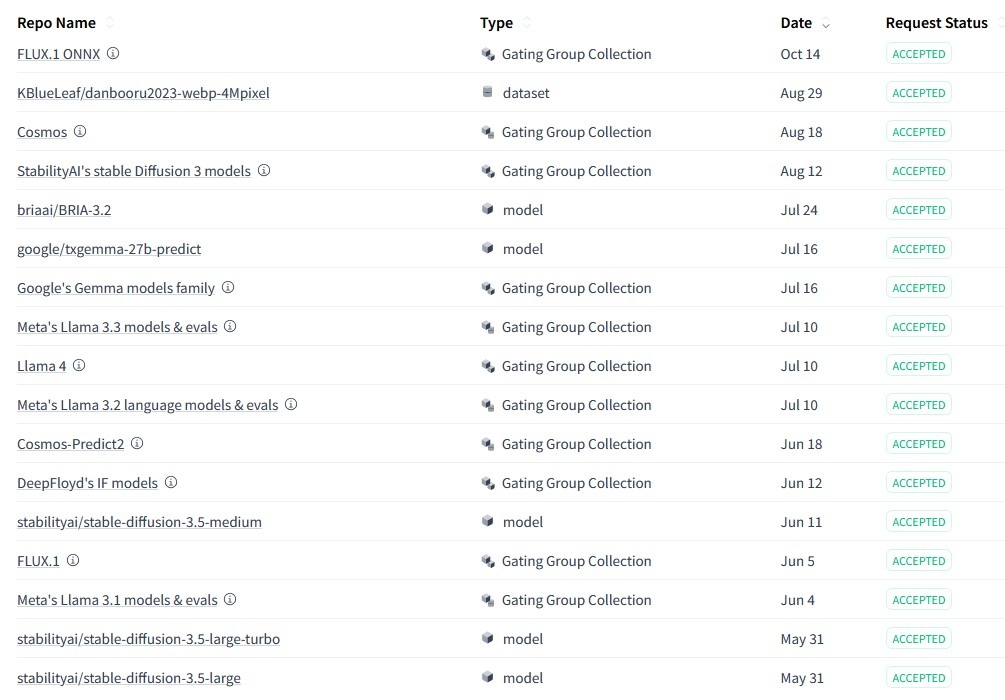

Repos where an access request is needed:

https://huggingface.co/meta-llama/Llama-3.1-8B-Instruct

https://huggingface.co/meta-llama/Llama-3.2-3B

https://huggingface.co/meta-llama/Llama-3.3-70B-Instruct

https://huggingface.co/google/gemma-7b-it

https://huggingface.co/DeepFloyd/IF-I-XL-v1.0

https://huggingface.co/briaai/BRIA-3.2

https://huggingface.co/stabilityai/stable-diffusion-3-medium

https://huggingface.co/stabilityai/stable-diffusion-3.5-large

https://huggingface.co/black-forest-labs/FLUX.1-dev

https://huggingface.co/nvidia/Cosmos-Predict2-14B-Text2Image

Error example

If you have been granted access to the repo and entered the token in the WebUI, but still get an error like this:

| Code Block |

|---|

2025-06-20 13:25:36,995 | 97698f11112d | sd | ERROR | sd_models | Load model: path="/mnt/models/Diffusers/models--HiDream-ai--HiDream-I1-Dev/snapshots/5b3f48f0d64d039cd5e4b6bd47b4f4e0cbebae62" 401 Client Error. (Request ID: Root=1-68556150-3f4018045ca5c71e00c09f88;3ae0ae6d-465e-46ed-9934-c5ea6af2097d)

Cannot access gated repo for url https://huggingface.co/api/models/meta-llama/Meta-Llama-3.1-8B-Instruct/auth-check.

Access to model meta-llama/Llama-3.1-8B-Instruct is restricted. You must have access to it and be authenticated to access it. Please log in.

2025-06-20 13:25:37,786 | 97698f11112d | sd | ERROR | sd_models | StableDiffusionPipeline: Pipeline <class 'diffusers.pipelines.stable_diffusion.pipeline_stable_diffusion.StableDiffusionPipeline'> expected ['feature_extractor', 'image_encoder', |
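The log above shows the request failing at an `auth-check` URL on huggingface.co. To find out whether the token itself is the problem, the same kind of request can be sent outside SD.Next. The sketch below is an illustration only: the function names are hypothetical, the URL pattern is copied from the error message, and it assumes that a 200 response means access is granted while an HTTP error such as the 401 above means it is not.

```python
import urllib.error
import urllib.request


def build_auth_check_request(repo_id, token):
    """Build a GET request for the auth-check URL of repo_id with a bearer token."""
    url = f"https://huggingface.co/api/models/{repo_id}/auth-check"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def can_access(repo_id, token, timeout=10):
    """Return True on HTTP 200, False on an HTTP error status (e.g. 401 or 403).

    Network failures other than HTTP error statuses are not caught here.
    """
    request = build_auth_check_request(repo_id, token)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError:
        return False
```

If `can_access("meta-llama/Llama-3.1-8B-Instruct", token)` returns `False` with a token that was just created, the access request for that repo may still be pending, or the token may lack permission to read gated repos.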

Manual login

The workaround is to log in manually:

| Code Block |

|---|

docker exec -it sdnext-ipex bash |

Inside the container:

| Code Block |

|---|

export venv_dir=/mnt/python/venv/

source "${venv_dir}"/bin/activate

git config --global credential.helper store

/mnt/python/venv/bin/huggingface-cli login |

| Code Block |

|---|

(venv) root@97698f11112d:/app# /mnt/python/venv/bin/huggingface-cli login

_| _| _| _| _|_|_| _|_|_| _|_|_| _| _| _|_|_| _|_|_|_| _|_| _|_|_| _|_|_|_|

_| _| _| _| _| _| _| _|_| _| _| _| _| _| _| _|

_|_|_|_| _| _| _| _|_| _| _|_| _| _| _| _| _| _|_| _|_|_| _|_|_|_| _| _|_|_|

_| _| _| _| _| _| _| _| _| _| _|_| _| _| _| _| _| _| _|

_| _| _|_| _|_|_| _|_|_| _|_|_| _| _| _|_|_| _| _| _| _|_|_| _|_|_|_|

A token is already saved on your machine. Run `huggingface-cli whoami` to get more information or `huggingface-cli logout` if you want to log out.

Setting a new token will erase the existing one.

To log in, `huggingface_hub` requires a token generated from https://huggingface.co/settings/tokens .

Enter your token (input will not be visible): PUT HERE YOUR hf_.............

Add token as git credential? (Y/n) y

Token is valid (permission: write).

The token `SDNext` has been saved to /root/.cache/huggingface/stored_tokens

Cannot authenticate through git-credential as no helper is defined on your machine.

You might have to re-authenticate when pushing to the Hugging Face Hub.

Run the following command in your terminal in case you want to set the 'store' credential helper as default.

git config --global credential.helper store

Read https://git-scm.com/book/en/v2/Git-Tools-Credential-Storage for more details.

Token has not been saved to git credential helper.

Your token has been saved to /root/.cache/huggingface/token

Login successful.

The current active token is: `SDNext` |
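The login output reports that the token was saved to `/root/.cache/huggingface/token`. Since the compose file maps `/root/.cache/huggingface` to a host volume, the file should survive container re-creation. A quick way to confirm that a token is actually present, without printing it in full, is sketched below. The helper name `saved_token_summary` is hypothetical, and the sketch assumes the file holds the token as plain text.

```python
from pathlib import Path


def saved_token_summary(token_path="/root/.cache/huggingface/token"):
    """Return a masked summary of the saved token, or None if none is stored."""
    path = Path(token_path)
    if not path.is_file():
        return None
    token = path.read_text(encoding="utf-8").strip()
    if not token:
        return None
    # Show only the first three characters so the secret is not leaked to logs.
    return f"{token[:3]}... ({len(token)} chars)"
```

Run inside the container, a `None` result would mean that the login did not persist and `huggingface-cli login` has to be repeated.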

First start console output example without ipex

| Code Block |

|---|

root@server6:~/ollama-cpu-docker# docker compose up -d; docker logs --since 1h --follow sdnext-ipex;

[+] Running 2/2

✔ Network ollama-cpu-docker_default Created 0.0s

✔ Container sdnext-ipex Started 2.0s

fatal: not a git repository (or any parent up to mount point /)

Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).

Cloning into '.'...

Create python venv

Activate python venv: /mnt/python/venv

Launch: /mnt/python/venv/bin/python3

14:19:52-182833 INFO Starting SD.Next

14:19:52-184379 INFO Logger: file="/app/sdnext.log" level=DEBUG

host="64f02122b05d" size=79 mode=append

14:19:52-184959 INFO Python: version=3.12.3 platform=Linux

bin="/mnt/python/venv/bin/python3"

venv="/mnt/python/venv"

14:19:52-192953 INFO Version: app=sd.next updated=2025-10-02 hash=ef6563376

branch=ipex

url=https://github.com/liutyi/sdnext.git/tree/ipex

ui=main

14:19:52-193606 DEBUG Branch mismatch: sdnext=ipex ui=main

14:19:52-203815 DEBUG Branch synchronized: ipex

14:19:52-346183 ERROR Repository failed to check version: 'commit'

{'message': "API rate limit exceeded for xx.xx.xx.xx.

(But here's the good news: Authenticated requests get a

higher rate limit. Check out the documentation for more

details.)", 'documentation_url':

'https://docs.github.com/rest/overview/resources-in-the

-rest-api#rate-limiting'}

14:19:52-347865 INFO Platform: arch=x86_64 cpu=x86_64 system=Linux

release=6.14.0-32-generic python=3.12.3 locale=('C',

'UTF-8') docker=True

14:19:52-348559 DEBUG Packages: prefix=../mnt/python/venv

site=['../mnt/python/venv/lib/python3.12/site-packages'

]

14:19:52-349049 INFO Args: ['-f', '--use-ipex', '--uv', '--listen',

'--insecure', '--api-log', '--log', 'sdnext.log']

14:19:52-349520 DEBUG Setting environment tuning

14:19:52-349915 DEBUG Torch allocator:

"garbage_collection_threshold:0.80,max_split_size_mb:51

2"

14:19:52-350367 DEBUG Install: package="uv" install required

14:19:53-743978 DEBUG Install: package="gradio" install required

14:20:00-808790 INFO Verifying torch installation

14:20:00-813464 DEBUG Torch overrides: cuda=False rocm=False ipex=True

directml=False openvino=False zluda=False

14:20:00-814098 INFO IPEX: Intel OneAPI toolkit detected

14:20:00-814494 DEBUG Install: package="torch" install required

14:20:00-814888 DEBUG Install: package="torchvision" install required

14:20:00-815284 DEBUG Install: package="torchaudio" install required

14:20:00-815677 DEBUG Install: package="xpu" install required

14:20:15-778575 INFO Torch detected: gpu="Intel(R) Arc(TM) Graphics"

vram=128339 units=128

14:20:15-779442 DEBUG Install: package="onnx" install required

14:20:17-278421 DEBUG Install: package="onnxruntime" install required

14:20:18-355326 INFO Transformers install: version=4.56.2

14:20:27-151710 INFO Diffusers install:

commit=64a5187d96f9376c7cf5123db810f2d2da79d7d0

14:20:38-296481 INFO Install requirements: this may take a while...

14:20:38-808596 WARNING Install: cmd="uv pip" args="install -r

requirements.txt" cannot use uv, fallback to pip

14:21:25-608112 INFO Install: verifying requirements

14:21:30-096095 INFO Startup: standard

14:21:30-096669 INFO Verifying submodules

14:21:43-290978 DEBUG Git detached head detected:

folder="extensions-builtin/sd-extension-chainner"

reattach=main

14:21:43-291873 DEBUG Git submodule: extensions-builtin/sd-extension-chainner

/ main

14:21:43-300854 DEBUG Git detached head detected:

folder="extensions-builtin/sd-extension-system-info"

reattach=main

14:21:43-301522 DEBUG Git submodule:

extensions-builtin/sd-extension-system-info / main

14:21:43-308227 DEBUG Git detached head detected:

folder="extensions-builtin/sdnext-modernui"

reattach=main

14:21:43-308820 DEBUG Git submodule: extensions-builtin/sdnext-modernui /

main

14:21:43-325661 DEBUG Git detached head detected:

folder="extensions-builtin/stable-diffusion-webui-rembg

" reattach=master

14:21:43-326569 DEBUG Git submodule:

extensions-builtin/stable-diffusion-webui-rembg /

master

14:21:43-336593 DEBUG Git detached head detected: folder="wiki"

reattach=master

14:21:43-337511 DEBUG Git submodule: wiki / master

14:21:43-364999 DEBUG Installed packages: 174

14:21:43-365576 DEBUG Extensions all: ['sd-extension-chainner',

'sd-extension-system-info', 'sdnext-modernui',

'stable-diffusion-webui-rembg']

14:21:43-394140 DEBUG Extension installer: builtin=True

file="/app/extensions-builtin/stable-diffusion-webui-re

mbg/install.py"

14:21:46-940113 INFO Extension installed packages:

stable-diffusion-webui-rembg

['opencv-python-headless==4.12.0.88', 'pooch==1.8.2',

'pymatting==1.1.14', 'rembg==2.0.67']

14:21:46-941087 INFO Extensions enabled: ['sd-extension-chainner',

'sd-extension-system-info', 'sdnext-modernui',

'stable-diffusion-webui-rembg']

14:21:46-952833 INFO Install: verifying requirements

14:21:46-955116 DEBUG Setup complete without errors: 1759414907

14:21:46-957523 DEBUG Extension preload: {'extensions-builtin': 0.0,

'/mnt/data/extensions': 0.0}

14:21:46-958387 INFO Installer time: total=277.19 pip=102.69

requirements=47.81 install=36.22 torch=14.97 ipex=13.36

git=13.34 submodules=13.25 diffusers=11.05

transformers=8.80 base=5.17 packages=4.00

stable-diffusion-webui-rembg=3.55 onnx=2.58 latest=0.16

files=0.10 branch=0.06

14:21:46-959364 INFO Command line args: ['-f', '--use-ipex', '--uv',

'--listen', '--insecure', '--api-log', '--log',

'sdnext.log'] f=True uv=True use_ipex=True

insecure=True listen=True log=sdnext.log args=[]

14:21:46-960057 DEBUG Env flags: ['SD_VAE_DEBUG=true', 'SD_DOCKER=true',

'SD_DATADIR=/mnt/data', 'SD_MODELSDIR=/mnt/models']

14:21:46-960546 DEBUG Linker flags: preload="None"

path=":/mnt/python/venv/lib/"

14:21:46-961027 DEBUG Starting module: <module 'webui' from '/app/webui.py'>

14:21:52-472525 DEBUG System: cores=22 affinity=22 threads=16

14:21:52-474247 INFO Torch: torch==2.7.1+xpu torchvision==0.22.1+xpu

14:21:52-474728 INFO Packages: diffusers==0.36.0.dev0 transformers==4.56.2

accelerate==1.10.1 gradio==3.43.2 pydantic==1.10.21

numpy==2.3.3

14:21:52-850006 DEBUG ONNX: version=1.23.0,

available=['AzureExecutionProvider',

'CPUExecutionProvider']

14:21:52-920163 DEBUG State initialized: id=136709158132896

14:21:53-831664 INFO Device detect: memory=125.0 default=balanced

optimization=highvram

14:21:53-838787 DEBUG Settings: fn="/mnt/data/config.json" created

14:21:53-840641 INFO Engine: backend=Backend.DIFFUSERS compute=ipex

device=xpu attention="Scaled-Dot-Product" mode=no_grad

14:21:53-841458 DEBUG Save: file="/mnt/data/config.json" json=0 bytes=2

time=0.002

14:21:53-842603 DEBUG Read: file="html/reference.json" json=106 bytes=53223

time=0.000 fn=_call_with_frames_removed:<module>

14:21:53-843385 DEBUG Torch attention: type="sdpa" flash=True memory=True

math=True

14:21:54-347712 INFO Torch parameters: backend=ipex device=xpu config=Auto

dtype=torch.bfloat16 context=no_grad nohalf=False

nohalfvae=False upcast=False deterministic=False

tunable=[False, False] fp16=pass bf16=pass

optimization="Scaled-Dot-Product"

14:21:54-348863 INFO Device: device=Intel(R) Arc(TM) Graphics n=1 ipex=

driver=1.6.35096+9

14:21:54-394243 TRACE Trace: VAE

14:21:54-473654 DEBUG Entering start sequence

14:21:54-474208 INFO Base path: data="/mnt/data"

14:21:54-474595 INFO Base path: models="/mnt/models"

14:21:54-474996 INFO Create: folder="/mnt/models/Stable-diffusion"

14:21:54-475399 INFO Create: folder="/mnt/models/Diffusers"

14:21:54-475773 INFO Create: folder="/mnt/models/huggingface"

14:21:54-476141 INFO Create: folder="/mnt/models/VAE"

14:21:54-476530 INFO Create: folder="/mnt/models/UNET"

14:21:54-476903 INFO Create: folder="/mnt/models/Text-encoder"

14:21:54-477500 INFO Create: folder="/mnt/models/Lora"

14:21:54-477885 INFO Create: folder="/mnt/models/tunable"

14:21:54-478251 INFO Create: folder="/mnt/models/embeddings"

14:21:54-478631 INFO Create: folder="/mnt/models/ONNX/temp"

14:21:54-479037 INFO Create: folder="/mnt/data/outputs/text"

14:21:54-479422 INFO Create: folder="/mnt/data/outputs/image"

14:21:54-479796 INFO Create: folder="/mnt/data/outputs/control"

14:21:54-480201 INFO Create: folder="/mnt/data/outputs/extras"

14:21:54-480585 INFO Create: folder="/mnt/data/outputs/inputs"

14:21:54-480949 INFO Create: folder="/mnt/data/outputs/grids"

14:21:54-481343 INFO Create: folder="/mnt/data/outputs/save"

14:21:54-481711 INFO Create: folder="/mnt/data/outputs/video"

14:21:54-482341 INFO Create: folder="/mnt/models/yolo"

14:21:54-482714 INFO Create: folder="/mnt/models/wildcards"

14:21:54-483195 DEBUG Initializing

14:21:54-520191 INFO Available VAEs: path="/mnt/models/VAE" items=0

14:21:54-520799 INFO Available UNets: path="/mnt/models/UNET" items=0

14:21:54-521258 INFO Available TEs: path="/mnt/models/Text-encoder" items=0

14:21:54-521818 INFO Available Models:

safetensors="/mnt/models/Stable-diffusion":0

diffusers="/mnt/models/Diffusers":0 reference=106

items=0 time=0.00

14:21:54-534411 INFO Available LoRAs: path="/mnt/models/Lora" items=0

folders=2 time=0.00

14:21:54-539670 INFO Available Styles: path="/mnt/models/styles" items=288

time=0.00

14:21:54-541826 DEBUG Install: package="basicsr" install required

14:21:57-859249 DEBUG Install: package="gfpgan" install required

14:22:00-303207 INFO Available Detailer: path="/mnt/models/yolo" items=11

downloaded=0

14:22:00-304138 DEBUG Extensions: disabled=[]

14:22:00-304610 INFO Load extensions

14:22:00-795505 DEBUG Extensions init time: total=0.49 xyz_grid.py=0.18

stable-diffusion-webui-rembg=0.09

sd-extension-chainner=0.07

14:22:00-813362 DEBUG Read: file="html/upscalers.json" json=4 bytes=2640

time=0.000 fn=__init__:__init__

14:22:00-814058 INFO Upscaler create: folder="/mnt/models/chaiNNer"

14:22:00-814554 DEBUG Read:

file="extensions-builtin/sd-extension-chainner/models.j

son" json=25 bytes=2803 time=0.000

fn=__init__:find_scalers

14:22:00-815407 DEBUG Available chaiNNer: path="/mnt/models/chaiNNer"

defined=25 discovered=0 downloaded=0

14:22:00-816067 INFO Upscaler create: folder="/mnt/models/RealESRGAN"

14:22:00-816717 INFO Available Upscalers: items=72 downloaded=0 user=0

time=0.02 types=['None', 'Resize', 'Latent',

'AsymmetricVAE', 'DCC', 'VIPS', 'ChaiNNer', 'AuraSR',

'ESRGAN', 'RealESRGAN', 'SCUNet', 'Diffusion',

'SwinIR']

14:22:00-826361 DEBUG Install: package="hf_transfer" install required

14:22:01-364069 INFO Huggingface init: transfer=rust parallel=True

direct=False token="None"

cache="/mnt/models/huggingface"

14:22:01-364862 DEBUG Huggingface cache: path="/mnt/models/huggingface"

size=0 MB

14:22:01-365439 DEBUG UI start sequence

14:22:01-366741 INFO UI locale: name="Auto"

14:22:01-367226 INFO UI theme: type=Modern name="Default" available=35

14:22:01-367773 DEBUG UI theme:

css="extensions-builtin/sdnext-modernui/themes/Default.

css" base="['base.css', 'timesheet.css']" user="None"

14:22:01-439430 DEBUG UI initialize: tab=txt2img

14:22:01-460909 DEBUG Networks: type="model" items=104 subfolders=4

tab=txt2img folders=['/mnt/models/Stable-diffusion',

'models/Reference', '/mnt/models/Stable-diffusion']

list=0.01 thumb=0.00 desc=0.00 info=0.00 workers=12

14:22:01-462379 DEBUG Networks: type="lora" items=0 subfolders=1 tab=txt2img

folders=['/mnt/models/Lora'] list=0.01 thumb=0.01

desc=0.00 info=0.00 workers=12

14:22:01-467581 DEBUG Networks: type="style" items=288 subfolders=3

tab=txt2img folders=['/mnt/models/styles', 'html']

list=0.01 thumb=0.00 desc=0.00 info=0.00 workers=12

14:22:01-470088 DEBUG Networks: type="wildcards" items=0 subfolders=1

tab=txt2img folders=['/mnt/models/wildcards'] list=0.00

thumb=0.00 desc=0.00 info=0.00 workers=12

14:22:01-471061 DEBUG Networks: type="embedding" items=0 subfolders=1

tab=txt2img folders=['/mnt/models/embeddings']

list=0.00 thumb=0.00 desc=0.00 info=0.00 workers=12

14:22:01-472019 DEBUG Networks: type="vae" items=0 subfolders=1 tab=txt2img

folders=['/mnt/models/VAE'] list=0.00 thumb=0.00

desc=0.00 info=0.00 workers=12

14:22:01-472930 DEBUG Networks: type="history" items=0 subfolders=1

tab=txt2img folders=[] list=0.00 thumb=0.00 desc=0.00

info=0.00 workers=12

14:22:01-833528 DEBUG UI initialize: tab=img2img

14:22:01-977612 DEBUG UI initialize: tab=control models="/mnt/models/control"

14:22:02-241457 DEBUG UI initialize: tab=video

14:22:02-342434 DEBUG UI initialize: tab=process

14:22:02-370804 DEBUG UI initialize: tab=caption

14:22:02-442271 DEBUG UI initialize: tab=models

14:22:02-488111 DEBUG UI initialize: tab=gallery

14:22:02-512108 DEBUG Reading failed: /mnt/data/ui-config.json [Errno 2] No

such file or directory: '/mnt/data/ui-config.json'

14:22:02-512713 DEBUG UI initialize: tab=settings

14:22:02-515335 DEBUG Settings: sections=23 settings=371/587 quicksettings=0

14:22:02-820299 DEBUG UI initialize: tab=info

14:22:02-834256 DEBUG UI initialize: tab=extensions

14:22:02-836909 INFO Extension list is empty: refresh required

14:22:02-859799 DEBUG Extension list: processed=3 installed=3 enabled=3

disabled=0 visible=3 hidden=0

14:22:03-227231 DEBUG Save: file="/mnt/data/ui-config.json" json=0 bytes=2

time=0.000

14:22:03-269461 DEBUG Root paths: ['/app', '/mnt/data', '/mnt/models']

14:22:03-349864 INFO Local URL: http://localhost:7860/

14:22:03-350439 WARNING Public URL: enabled without authentication

14:22:03-350844 WARNING Public URL: enabled with insecure flag

14:22:03-351235 INFO External URL: http://172.19.0.2:7860

14:22:03-507766 INFO Public URL: http://xx.xx.xx.xx:7860

14:22:03-508286 INFO API docs: http://localhost:7860/docs

14:22:03-508658 INFO API redocs: http://localhost:7860/redocs

14:22:03-510130 DEBUG API middleware: [<class

'starlette.middleware.base.BaseHTTPMiddleware'>, <class

'starlette.middleware.gzip.GZipMiddleware'>]

14:22:03-510720 DEBUG API initialize

14:22:03-637255 DEBUG Scripts setup: time=0.271 ['XYZ Grid:0.031']

14:22:03-638136 DEBUG Model metadata: file="/mnt/data/metadata.json" no

changes

14:22:03-638651 INFO Model: autoload=True selected="model.safetensors"

14:22:03-639815 DEBUG Model requested: fn=threading.py:run:<lambda>

14:22:03-640847 ERROR No models found

14:22:03-641442 INFO Set system paths to use existing folders

14:22:03-642024 INFO or use --models-dir <path-to-folder> to specify base

folder with all models

14:22:03-642721 INFO or use --ckpt <path-to-checkpoint> to force using

specific model

14:22:03-643462 DEBUG Cache clear

14:22:03-644778 DEBUG Script init: ['system-info.py:app_started=0.05']

14:22:03-645267 INFO Startup time: total=376.61 launch=120.26 loader=119.98

installer=119.97 detailer=5.76 torch=4.11

libraries=2.23 gradio=0.87 cleanup=0.54 extensions=0.49

ui-defaults=0.36 ui-txt2img=0.34 ui-extensions=0.34

ui-networks=0.24 bnb=0.20 ui-control=0.19

ui-img2img=0.11 ui-models=0.10 ui-video=0.08 api=0.07

diffusers=0.07 app-started=0.05

14:22:03-648495 DEBUG Save: file="/mnt/data/config.json" json=12 bytes=618

time=0.004

14:22:09-443940 INFO API user=None code=200 http/1.1 GET /sdapi/v1/version

172.19.0.1 0.0004

14:22:09-446849 DEBUG UI: connected

14:22:11-818033 INFO API user=None code=200 http/1.1 GET /sdapi/v1/version

172.19.0.1 0.0017

14:22:11-819108 DEBUG UI: connected

|
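The `Installer time:` and `Startup time:` records in the log above break the first start down into `name=seconds` pairs, which makes it easy to see where the time goes. The sketch below extracts such a record into a dictionary. It is a hypothetical helper written for this log layout: it assumes each record starts with an `HH:MM:SS-microseconds` timestamp and that a wrapped record continues until the next timestamp.

```python
import re

# name=number pairs such as total=376.61 or ui-txt2img=0.34
_PAIR = re.compile(r"([\w.\-]+)=(\d+(?:\.\d+)?)")
# start of the next log record, e.g. "14:22:03-648495 "
_NEXT_RECORD = re.compile(r"\n\d{2}:\d{2}:\d{2}-\d{6} ")


def parse_timings(log_text, label="Startup time:"):
    """Return {name: seconds} for the first record in log_text containing label."""
    start = log_text.find(label)
    if start < 0:
        return {}
    rest = log_text[start + len(label):]
    boundary = _NEXT_RECORD.search(rest)
    if boundary:
        rest = rest[:boundary.start()]
    return {name: float(value) for name, value in _PAIR.findall(rest)}
```

For instance, `parse_timings(text, label="Installer time:")` on the saved log would return the installer breakdown, which can then be sorted by value to find the slowest step.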
